Turn On, Tune In, Transcribe: U.N. Develops Radio-Listening Tool

People listen to the radio as the results of the presidential elections are announced in Kireka, Uganda, in February. Many rural Ugandans don’t have Internet access, and the radio is a central source of news — and platform for citizens’ opinions. Isaac Kasamani/AFP/Getty Images hide caption

toggle caption

Isaac Kasamani/AFP/Getty Images

Voice recognition surrounds tech-loving Americans, from Siri to Google Assistant to Amazon Echo. Its omnipresence can make it easy to forget that making this technology has been really, really hard.

Understanding human speech is one of the most difficult frontiers in machine learning, and the biggest names in technology have devoted much time and money to conquering it. But their products still work for only a handful of languages.

Less prominent languages are still indecipherable to computers — even for text translations, let alone voice recognition.

The United Nations is working to change that, with an experiment in Uganda building a tool that can filter through the content of radio broadcasts. The ultimate goal: to involve more voices of rural citizens in decision-making about where to send aid or how to improve services.

The inspiration for the tool came from projects that use social media to identify citizens’ concerns — for instance, what concerns people have about an immunization drive, or how often they suffer power outages. That kind of work is pretty typical for the U.N.’s data analysis initiative, called Global Pulse.

But at the Global Pulse lab in Kampala, Uganda, social media analysis wouldn’t work, says lab manager Paula Hidalgo-Sanchis — especially if the U.N. wanted to listen to rural voices. In rural Uganda, Internet access is limited, so few people use social media — but nearly everyone has a cellphone, and call-in talk shows are popular.

“What people use to express their opinions is radio,” Hidalgo-Sanchis says.

Listening in languages Siri doesn’t speak

The U.N. estimates some 7.5 million words are spoken on Ugandan radio every day, across hundreds of stations.

It’s more than a small team of humans could possibly monitor. But if the U.N. had a particular topic in mind — say, disaster response — why not run the broadcast audio through a speech recognition program, then search the transcript for key terms, like “flooding,” to learn what aid is needed where?

Listening in was easy: Small devices using Raspberry Pi, a low-cost computer, could tune in to the broadcasts. But it took two years to figure out the transcription.

The U.N. worked with academics at Stellenbosch University in South Africa to teach computers three languages — Ugandan-accented English, Acholi and Luganda — that weren’t served by any existing speech-recognition programs.

No one has much incentive to develop models for languages like these, says Stellenbosch professor Thomas Niesler, who worked on the project with two post-doc researchers. For companies, there’s no market that justifies the investment. For academics, it’s not regarded as cutting-edge (and publishable) work, because the process is basically the same as what’s been done for other languages — albeit with a tiny fraction of the resources.

“For very good speech recognition, you need lots and lots and lots of audio,” Niesler says, all of it paired with an exact transcription.

“Companies like Apple and Google will have tens of thousands of hours of English which have been annotated in this way,” he says.

For the three languages the U.N. was interested in, the team managed to transcribe just 10 hours per language.

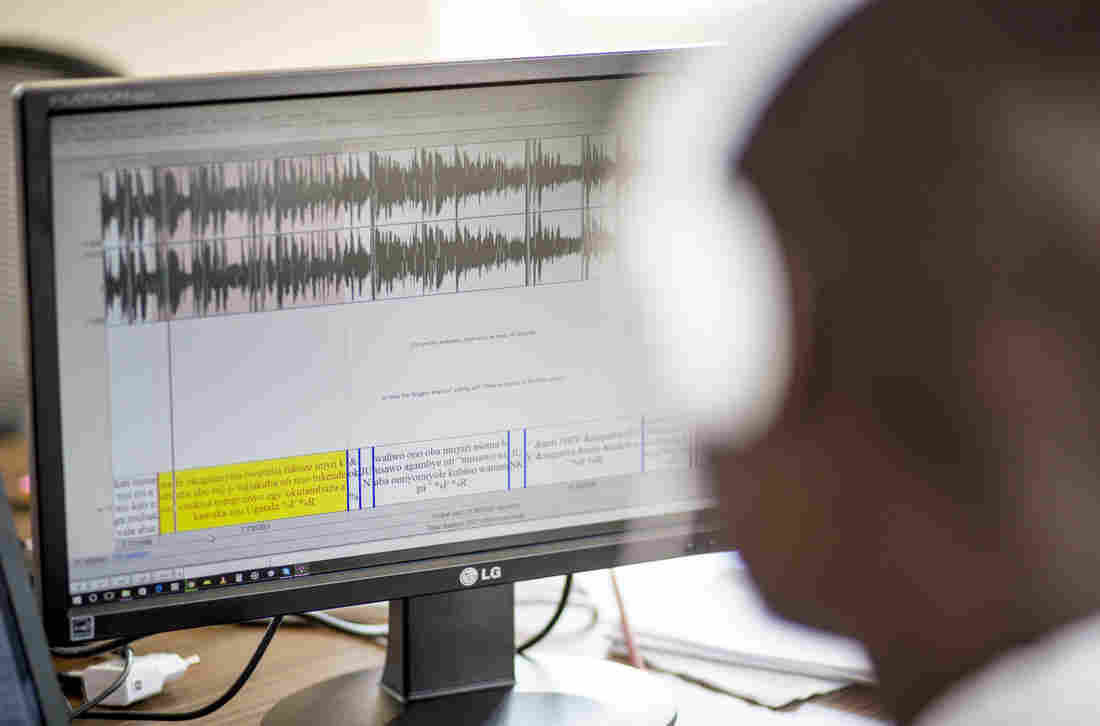

A staffer at Pulse Lab Kampala works on the development of the radio analysis tool. To build the models necessary for speech recognition, hours of audio in each language had to be carefully transcribed. Pulse Lab Kampala hide caption

toggle caption

Pulse Lab Kampala

A billion words vs. the Bible

The work only began there. Then the team needed to create a list of words and their pronunciations, to help teach the computer how individual sounds form the words it needs to recognize.

But just knowing sounds and words isn’t enough to accurately transcribe spoken language — you also need a bunch of text to help the computer recognize the likely word pairings rather than all possible ones.

“One of the things that really helps speech recognition is knowing which words tend to follow other words,” explains John Quinn, a U.N. data scientist who led the Pulse Kampala team on the project.

For a computer to learn which words to expect together, it needs to process lots and lots of sentences. In English that’s not a problem: Google has a database with more than 1 billion words, for instance.

For Acholi, on the other hand, “There was almost nothing,” Niesler says. Languages like these, without many transcriptions, word lists or existing texts, are known as underresourced — and they are among the trickiest to teach computers.

“I think our major resource was actually a translation of the Bible,” Niesler says. “And you can imagine the language which is used there diverges a lot from the language that’s used on the radio.”

The team fed the computer its manually created transcripts in addition to the Bible and other small amounts of text. When team members first ran the transcription program, it got only about two words in 10 correct, Niesler says.

The researchers worked to improve the model despite the lack of data. Eventually they reached an accuracy rate of at least 50 percent for all three languages, and up to 70 percent in some cases.

It’s tough, but not impossible, to make sense of texts with so many inaccurate words. “It’s a bit like social media analysis, but imagine we joined up everyone’s tweets into one big mass and then randomly changed 30 percent of the words to other words,” Quinn says. “That’s kind of what we’re looking at.”

Even 50 percent accuracy is enough to allow for topic recognition, Quinn says — that is, the computer can tell which conversations are relevant, even if human ears have to determine what was actually said.

‘There’s no precedent on how to do it’

The U.N. now has a prototype that works, from a technological point of view — it can listen to the radio streams and flag when a given topic comes up. Now they’re using pilot projects to see if it works from a development point of view — that is, whether the radio analysis is helpful.

Jeff Wokulira Ssebaggala, the CEO of a media and Internet freedom nonprofit called Unwanted Witness, says there’s no shortage of radio stations, but the U.N. might not find much meaningful conversation. “It’s [a] ‘quantity without quality’ phenomenon due to political interference,” he says. “Media houses are promoting self-censorship for fear of government reprisal when it comes to real issues affecting citizens.”

Lindsey Kukunda, a journalist and digital safety proponent who has worked for radio stations across Uganda, says she doesn’t doubt interesting conversations are happening on radio stations.

“In the rural areas radio is everything,” she says. “People actually participate, and with serious topics. … If you want to know what’s going on in the rural areas, then connecting to radio stations is definitely the way.”

But the details of the radio-monitoring project gave her pause — raising concerns about copyright, the possibility of spying and, especially, individual privacy.

“The virtual world never forgets,” she says, noting that a digital record might stick around long after the speaker changed his or her mind on an issue.

“And it’s harder when your voice is caught on radio. You know how they say Africa is a small place? Uganda is a very small place,” she says. “Someone can hear your voice and know you, for real.”

In response to such concerns, U.N. data scientist Quinn says the system is designed to keep only conversations that are flagged as relevant, and to only release them in aggregated, anonymized form — as an idea or a trend, instead of actual audio clips.

Lab manager Hidalgo-Sanchis also says the pilot programs are actively evaluating risks, including legal implications and how to protect the system itself from being stolen or misused. How the tool might ultimately be deployed and who would have access to it won’t be decided until there are data on whether it works, she says.

“This is something new, something that has never been done,” she says. “There’s no precedent on how to do it.”

Smaller, faster, messier

As the U.N. tests out the first prototype, the speech-to-text team is tackling another challenge.

It took years of work to develop the three new language models. But what if the U.N. wanted to filter through rural radio conversations in a different language — say, in the aftermath of a natural disaster?

There wouldn’t be time for new dictionaries and hours of transcribed audio. But maybe, Quinn says, you could build a model that only identifies the key phrases you’re interested in.

It’s actually harder to teach a computer to recognize a few words than to teach it an entire language. It all goes back to word order: If your model only knows a few phrases, you can’t use the words around them to help make sense of the audio.

But if such a system works even a little bit, it could still be helpful, Quinn says.

“This is the opposite direction of what most of the big labs are doing,” Niesler says. “There, [they] get more data and get more data and … learn as much as possible upon these huge resources. So we are going the other direction: We’re saying, we have less data and we have less data, and how well can we still do?”

That’s still an open question. But regardless of the future progress, Hidalgo-Sanchis calls the project so far a “milestone” in data analysis.

“It’s what artificial intelligence is bringing,” she says. “It’s transforming and then making usable data that we never thought it would become digital data. I think that’s really something.”